1.设置方式

https://blog.51cto.com/u_16213328/7866422

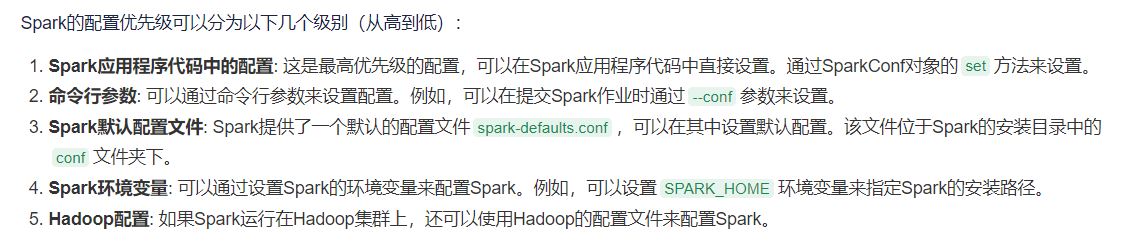

优先级

hadoop设置???

2.代码中设置(SparkSession、SparkContext、HiveContext、SQLContext)

https://blog.csdn.net/weixin_43648241/article/details/108917865

SparkSession > SparkContext > HiveContext > SQLContext

SparkSession包含SparkContext

SparkContext包含HiveContext

HiveContext包含SQLContext

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

| SparkSession.builder.\

config("hive.metastore.uris", "thrift://xxx.xx.x.xx:xxxx").\

config("spark.pyspark.python", "/opt/dm_python3/bin/python").\

config('spark.default.parallelism ', 10 ).\

config('spark.sql.shuffle.partitions', 200 ).\

config("spark.driver.maxResultSize", "16g").\

config("spark.port.maxRetries", "100").\

config("spark.driver.memory","16g").\

config("spark.yarn.queue", "dcp" ).\

config("spark.executor.memory", "16g" ).\

config( "spark.executor.cores", 20).\

config("spark.files", addfile).\

config( "spark.executor.instances", 6 ).\

config("spark.speculation", False).\

config( "spark.submit.pyFiles", zipfile).\

appName("testing").\

master("yarn").\

enableHiveSupport().\

getOrCreate()

|