Viewing the attention of different layers and different heads

Note the import:

`from bertviz.neuron_view import show`
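To make "different layers, different heads" concrete, here is a minimal sketch of the attention tensors that a tool like bertviz visualizes. It does not call bertviz itself. It builds a tiny, randomly initialized `BertModel` so that it runs without downloading weights; the config sizes and token ids are made up for illustration, and it assumes `torch` and `transformers` are installed.

```python
import torch
from transformers import BertConfig, BertModel

# Tiny random BERT (illustrative sizes, not a real checkpoint).
# attn_implementation="eager" is an assumption for recent transformers
# versions, where other attention backends may not return the weights.
config = BertConfig(
    vocab_size=100,
    hidden_size=32,
    num_hidden_layers=2,
    num_attention_heads=4,
    intermediate_size=64,
    attn_implementation="eager",
)
model = BertModel(config)
model.eval()  # disable dropout so each attention row sums to 1

input_ids = torch.tensor([[1, 5, 6, 7, 2]])  # hypothetical token ids
with torch.no_grad():
    outputs = model(input_ids, output_attentions=True)

# One tensor per layer, each of shape (batch, num_heads, seq_len, seq_len).
attentions = outputs.attentions

layer, head = 1, 3
attn = attentions[layer][0, head]  # (seq_len, seq_len) for one layer and head
print(len(attentions), tuple(attentions[0].shape), tuple(attn.shape))
```

With a real checkpoint and tokenizer, this per-layer `attentions` tuple is the kind of input that bertviz's `head_view(attentions, tokens)` takes in a notebook.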


Language Modeling, from the Hugging Face task summary: https://huggingface.co/docs/transformers/task_summary

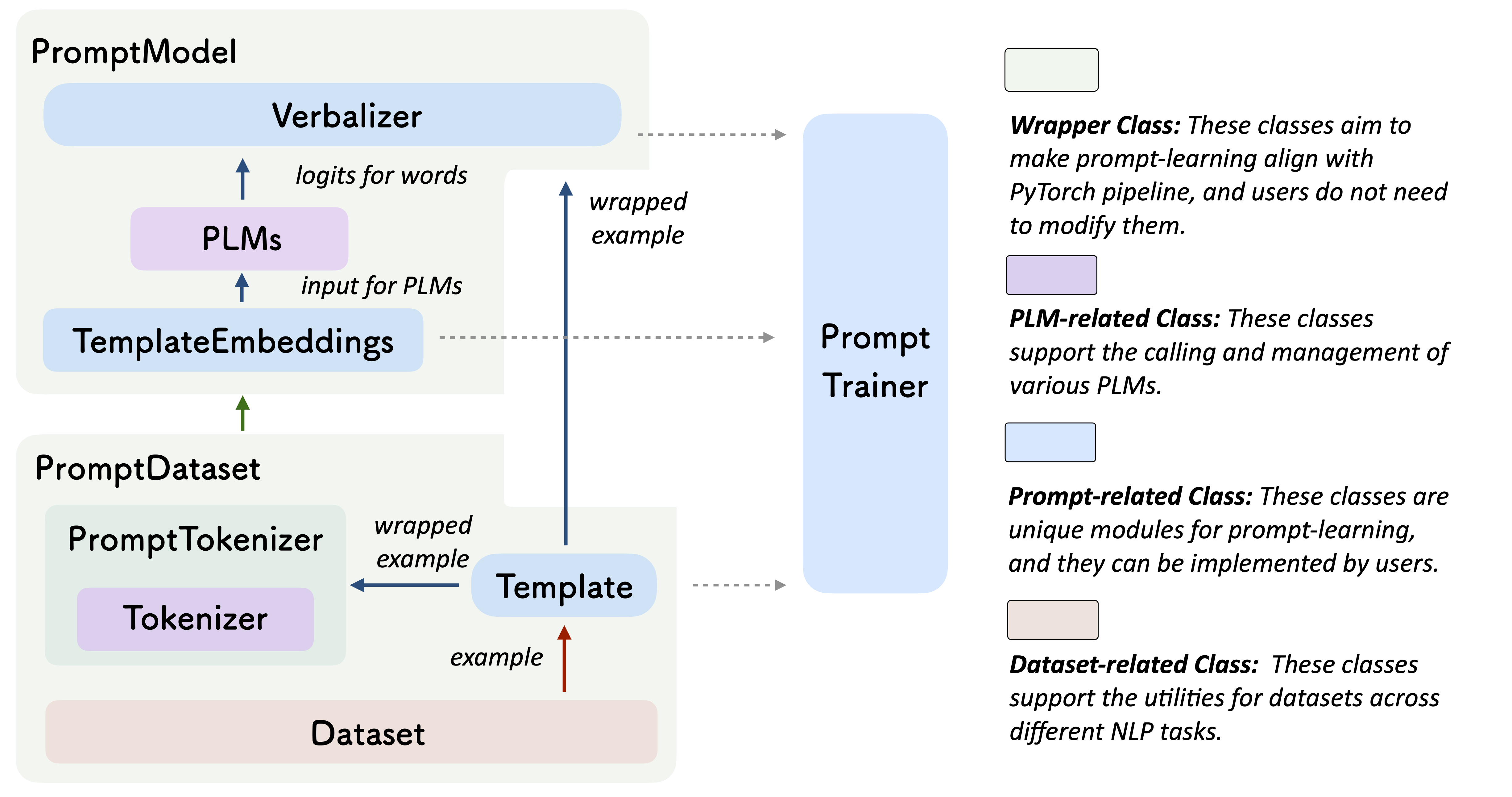

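Tsinghua's NLP lab (THUNLP) has released OpenPrompt, an open-source toolkit for prompt learning.

See the official repository, which has detailed steps and examples: https://hub.fastgit.xyz/thunlp/OpenPrompt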


An NLP helper: Hugging Face's transformers library

git: https://github.com/huggingface/transformers

paper: https://arxiv.org/abs/1910.03771v5

Overall structure

A simple tutorial:

https://blog.csdn.net/weixin_44614687/article/details/106800244

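Under the hood, the weights are loaded with `load_state_dict`. Checkpoint weights that have no matching parameter in the model are reported as unused: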

`Some weights of the model checkpoint at ../../../../test/data/chinese-roberta-wwm-ext were not used when initializing listnet_bert: ['cls.predictions.transform.dense.weight', 'cls.seq_relationship.bias', 'cls.predictions.transform.dense.bias', 'cls.predictions.decoder.weight', 'cls.predictions.transform.LayerNorm.bias', 'cls.seq_relationship.weight', 'cls.predictions.bias', 'cls.predictions.transform.LayerNorm.weight']`
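The message about unused weights comes from matching parameter names. As a rough sketch of that matching, the example below calls PyTorch's `load_state_dict` with `strict=False` on toy modules. `Encoder` and `OurModel` are hypothetical stand-ins, not the real BERT or the model from these notes, and the checkpoint is a hand-made dict with one extra `cls.*` entry.

```python
import torch
from torch import nn


class Encoder(nn.Module):
    """Hypothetical stand-in for the pretrained encoder."""

    def __init__(self):
        super().__init__()
        self.dense = nn.Linear(4, 4)


class OurModel(nn.Module):
    """Hypothetical downstream model: encoder plus a new task head."""

    def __init__(self):
        super().__init__()
        self.bert = Encoder()
        self.scorer = nn.Linear(4, 1)  # not present in the checkpoint


# Hand-made checkpoint: encoder weights plus a pretraining-head weight.
checkpoint = {
    "bert.dense.weight": torch.zeros(4, 4),
    "bert.dense.bias": torch.zeros(4),
    "cls.predictions.bias": torch.zeros(10),
}

model = OurModel()
# strict=False reports mismatched names instead of raising an error.
result = model.load_state_dict(checkpoint, strict=False)
print("unexpected (in checkpoint, unused):", result.unexpected_keys)
print("missing (in model, not loaded):", result.missing_keys)
```

Here the `cls.*` key plays the role of the pretraining heads listed in the warning: it exists in the checkpoint but has no counterpart in the model, so it is skipped, while the new `scorer` layer keeps its random initialization.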

BertModel -> our model

Step 1: load the model from transformers

`from transformers import BertPreTrainedModel, BertModel, AutoTokenizer, AutoConfig`

Step 2: build your own architecture on top of the model from step 1
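One way step 2 might look, as a minimal sketch rather than the actual model from these notes: a `BertPreTrainedModel` subclass that wraps `BertModel` and adds a linear scoring head. The class name `ListNetBert`, the `scorer` layer, and the tiny random config are all assumptions for illustration; the config is used so the example runs without a checkpoint.

```python
import torch
from torch import nn
from transformers import BertConfig, BertModel, BertPreTrainedModel


class ListNetBert(BertPreTrainedModel):
    """Hypothetical ranking model: BERT encoder plus a linear scoring head."""

    def __init__(self, config):
        super().__init__(config)
        # Naming the attribute "bert" keeps parameter names as "bert.*".
        self.bert = BertModel(config)
        # New layer with no pretrained weights.
        self.scorer = nn.Linear(config.hidden_size, 1)
        self.post_init()  # initialize weights

    def forward(self, input_ids, attention_mask=None):
        outputs = self.bert(input_ids, attention_mask=attention_mask)
        cls_vector = outputs.last_hidden_state[:, 0]  # first-token representation
        return self.scorer(cls_vector).squeeze(-1)    # one score per sequence


# Tiny random config (illustrative sizes) so no download is needed.
config = BertConfig(
    vocab_size=100,
    hidden_size=32,
    num_hidden_layers=2,
    num_attention_heads=4,
    intermediate_size=64,
)
model = ListNetBert(config)
model.eval()

input_ids = torch.tensor([[1, 5, 6, 2], [1, 7, 8, 2]])  # hypothetical token ids
with torch.no_grad():
    scores = model(input_ids)
print(tuple(scores.shape))
```

With a real checkpoint directory, `ListNetBert.from_pretrained(path)` would be expected to fill the `bert.*` parameters and leave `scorer` randomly initialized. The checkpoint's `cls.*` weights have no counterpart in such a model, which is consistent with the warning quoted earlier.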