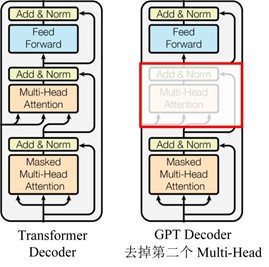

1 主要改动

relying on corrupting the input with masks, BERT neglects dependency between the masked positions and suffers from a pretrain-finetune discrepancy.

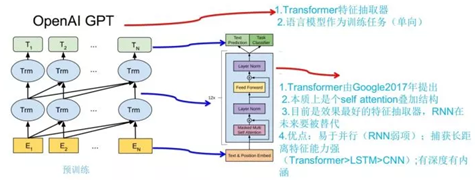

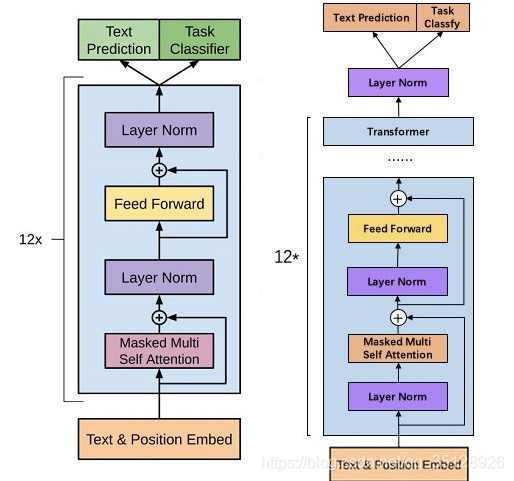

propose XLNet, a generalized autoregressive pretraining method that (1) enables learning bidirectional contexts by maximizing the expected likelihood over all permutations of the factorization order and (2) overcomes the limitations of BERT thanks to its autoregressive formulation. (3) , XLNet integrates ideas from Transformer-XL

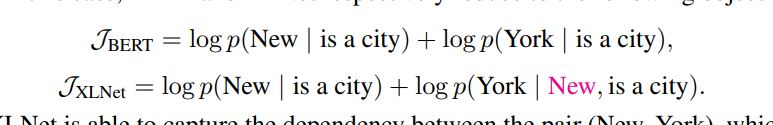

example:[New, York, is, a, city] . select the two tokens [New, York] as the prediction targets and maximize log p (New York | is a city)

In this case, BERT and XLNet respectively reduce to the following objectives:

2 现有PTM的问题

1 AR language modeling

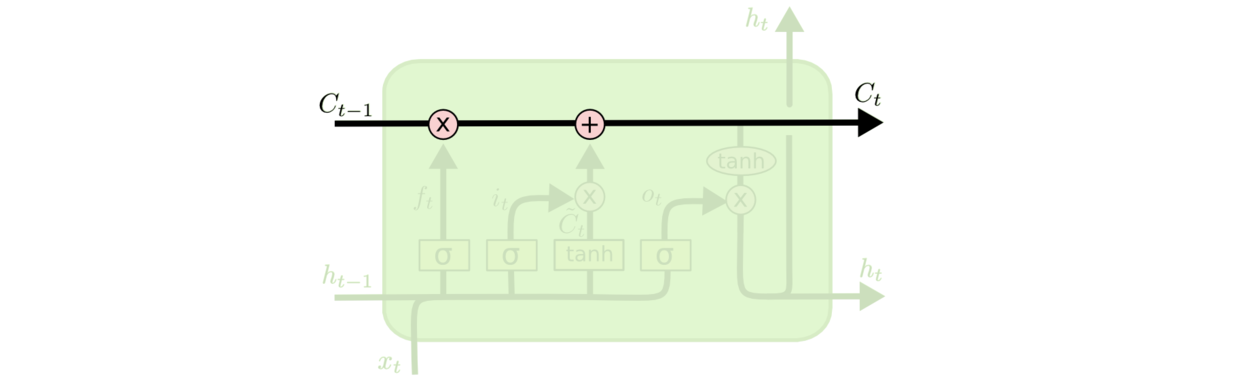

对于给定的句子$\textbf{x}=[x_1,…,x_T]$,AR language modeling performs pretraining by maximizing the likelihood under the forward autoregressive factorization

其中$h_{\theta}(\textbf{x}_{1:t-1})$是考虑上下文的文本表示,$e(x_t)$为$x_t$的词向量

2 AE anguage modeling

对于BERT这种AE模型,首先利用$\textbf{x}$构造遮盖的tokens$\overline{\textbf{x}}$和未遮盖的tokens$\hat{\textbf{x}}$,然后the training objective is to reconstruct $\overline{\textbf{x}}$ from $\hat{\textbf{x}}$:

其中$m_t=1$表示$x_t$被遮盖了,AR语言模型$t$时刻只能看到之前的时刻,因此记号是$h_{\theta}(\textbf{x}_{1:t-1})$;而AE模型可以同时看到整个句子的所有Token,因此记号是$H_{\theta}(\hat{\textbf{x}})_t$

这两个模型的优缺点分别为:

3 对比

1.AE因为遮盖词只是假设相互独立不是严格相互独立,因此为$\approx$。

2.AE在预训练时会出现特殊的token为[MASK],但是它在下游的fine-tuning中不会出现,这就出现了预训练 — finetune的不一致问题。而AR语言模型不会有这个问题。

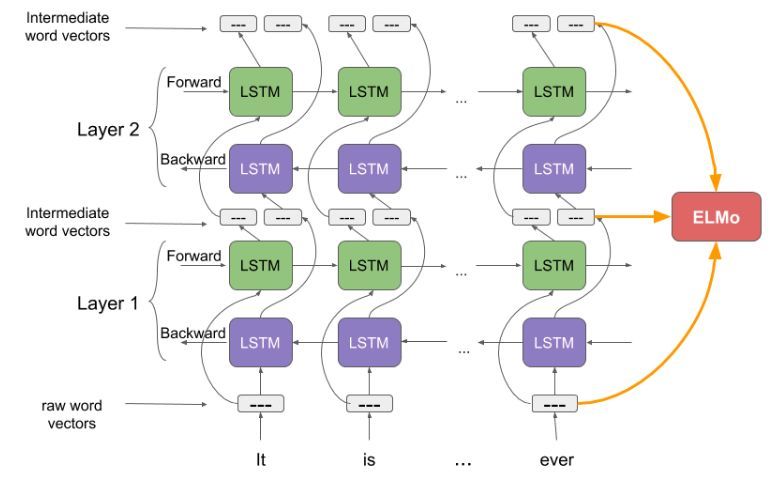

3.AR语言模型只能参考一个方向的上下文,而AE可以参考双向的上下文。

3 改动

3.1 排列语言模型

we propose the permutation language modeling objective that not only retains the benefits of AR models but also allows models to capture bidirectional context

给定长度为$T$的序列,总共有$T!$种排列方法。注意输入顺序是不会变的,因为模型在微调期间只会遇到具有自然顺序的文本序列。作者就是通Attention Mask,把其它没有被选到的单词Mask掉,不让它们在预测单词$x_i$的时候发生作用,看着就类似于把这些被选中的单词放到了上文。

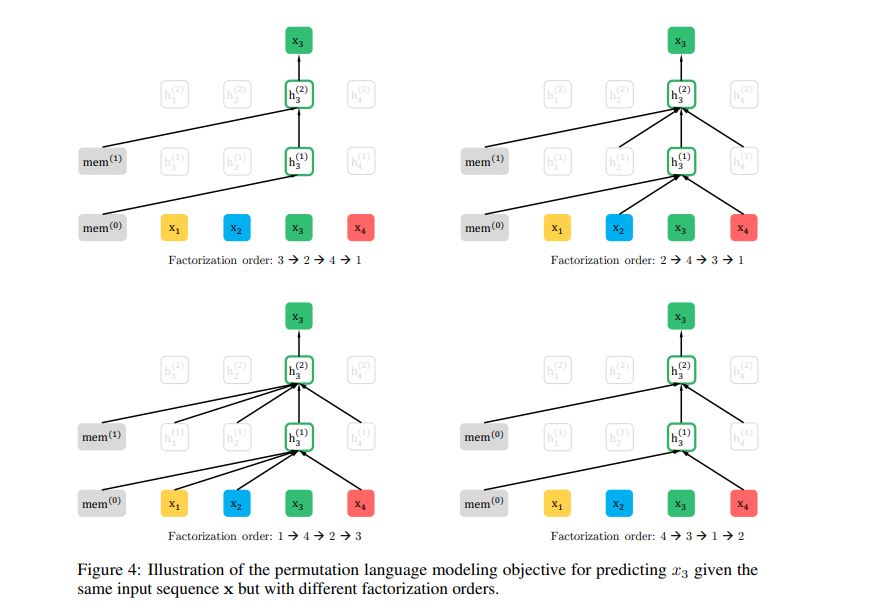

举个例子,如下图,输入序列为$\{x_1,x_2,x_3,x_4\}$,总共有4!,24种情况,作者取了其中4个。假如预测$x_3$,第一个排列为$x_3 \rightarrow x_2 \rightarrow x_4 \rightarrow x_1 $,没有排在$x_3$前面对象,所以只连接了mem,对于真实情况就是输入还是$x_1 \rightarrow x_2 \rightarrow x_3 \rightarrow x_4 $,然后mask掉全部输入,即只利用mem预测$ x_3 $;第二个排列为$x_2 \rightarrow x_4 \rightarrow x_3 \rightarrow x_1 $,$x_2,x_4$排在$x_3$前面,所以连接了$x_2,x_4$对应的向量表示,对于真实情况就是输入还是$x_1 \rightarrow x_2 \rightarrow x_3 \rightarrow x_4 $,然后mask掉$x_1,x_3$,剩余$x_2,x_4$,即利用mem,$x_2,x_4$预测$ x_3 $。

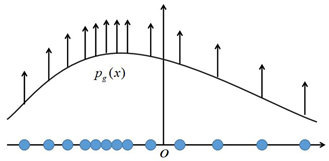

排列语言模型的目标是调整模型参数使得下面的似然概率最大

其中$\textbf{z}$为随机变量,表示某个位置排列,$\mathcal{Z}_T$表示全部的排列,$z_t$,$\textbf{z}_{<t}$分别表示某个位置排列的第$t$个元素和与其挨着的前面$t-1$个元素。

3.2 Two-Stream Self-Attention

Target-Aware Representations

采用AE原来的表达形式来描述下一个token的分布$p_{\theta}(X_{z_t}|\textbf{x}_{\textbf{z}_{<t}})$如下

这样表达有一个问题就是没有考虑预测目标词的位置,即没有考虑$ z_t$,这会导致ambiguity in target prediction。证明如下:假设有两个不同的排列$\textbf{z}^{(1)}$和$\textbf{z}^{(2)}$,并且满足如下关系:

可以推导出

但是$p_{\theta}(X_{z_t^{(1)}}=x|\textbf{x}_{\textbf{z}_{<t}^{(1)}}),p_{\theta}(X_{z_t^{(2)}}=x|\textbf{x}_{\textbf{z}_{<t}^{(2)}})$应该不一样,因为目标词的位置不同

为了解决这个问题,提出了Target-Aware Representations,其实就是考虑了目标词的位置

Two-Stream Self-Attention

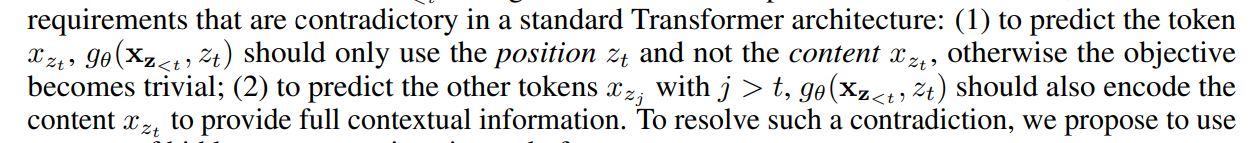

contradiction

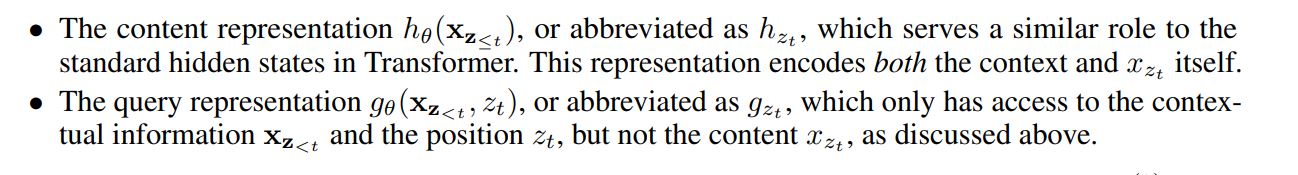

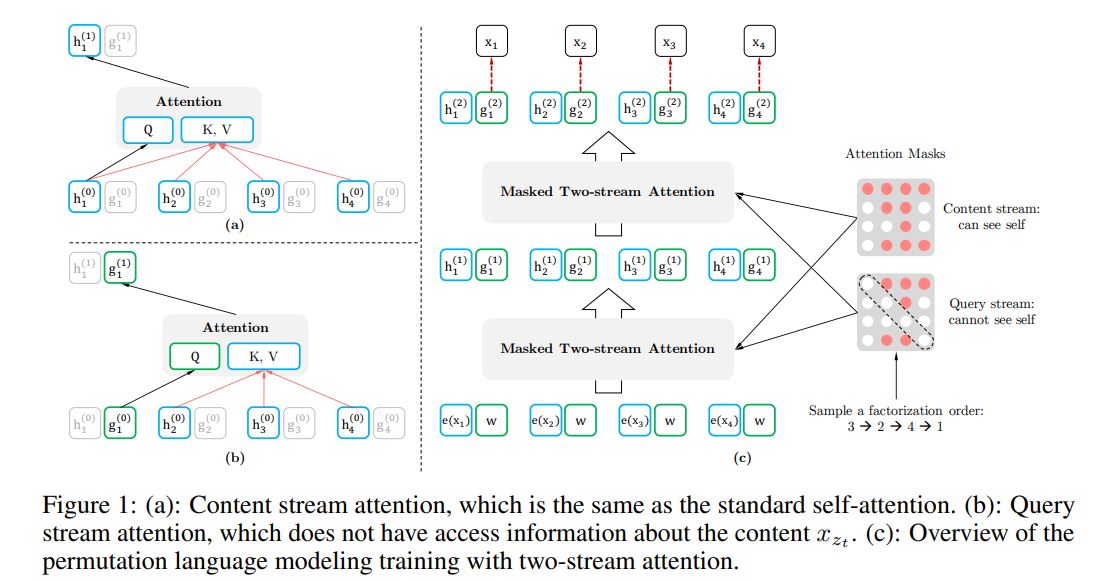

To resolve such a contradiction,we propose to use two sets of hidden representations instead of one:

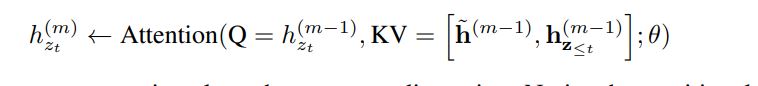

假设有self-attention的层号为$m=1,2,…,M$,$g_i^{(0)}=w$,$h_i^{(0)}=e(x_i)$,Two-Stream Self-Attention可以表示为

举个例子,如下图

预训练最终使用$g_{z_t}^{(M)}$计算公式(4),during finetuning, we can simply drop the query stream and use the content stream

during pretrain, we can use the last-layer query representation $g_{z_t}^{(M)}$ to compute Eq. (4).

during finetuning, we can simply drop the query stream and use the content stream as a normal Transformer(-XL).

3.3 Partial Prediction

因为排序很多,计算量很大,所以需要采样。将$z$分隔成$z_{t_\le c}$和 $z_{t_>c}$,$c$为分隔点,我们选择预测后面的词语,因为后面的词语包含的信息更加丰富。引入超参数$K$调整$c$,使得需要预测$\frac{1}{K}$的词($\frac{|z|-c}{|z|}\approx\frac{1}{K}$),优化目标为:

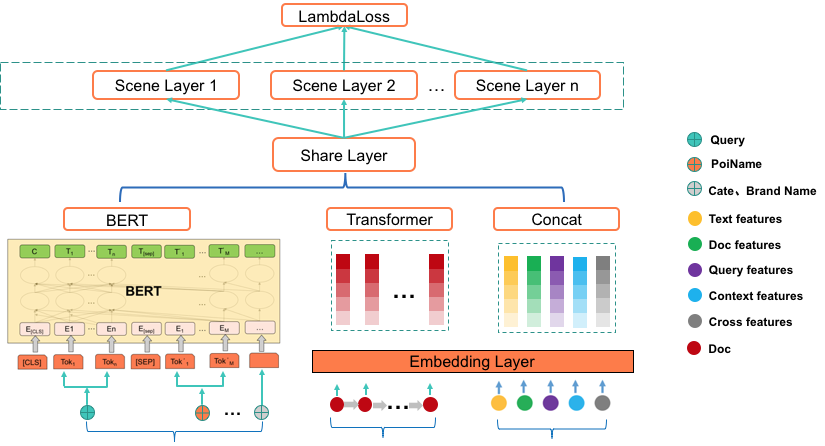

We integrate two important techniques in Transformer-XL, namely the relative positional encoding scheme and the segment recurrence mechanism

Relative Segment Encodings

recurrence mechanism

3.5 Modeling Multiple Segments

the input to our model is the same as BERT: [CLS, A, SEP, B, SEP], where “SEP” and “CLS” are two special symbols and “A” and “B” are the two segments. Although we follow the two-segment data format, XLNet-Large does not use the objective of next sentence prediction

BERT that adds an absolute segment embedding,这里采用Relative Segment Encodings

There are two benefits of using relative segment encodings. First, the inductive bias of relative encodings improves generalization [9]. Second, it opens the possibility of finetuning on tasks that have more than two input segments, which is not possible using absolute segment encodings.

这里有个疑问,对于多于两个seg的情况,比如3个seg,输入格式是否变成[CLS, A, SEP, B, SEP,C,SEP]

参考

https://zhuanlan.zhihu.com/p/107350079

https://blog.csdn.net/weixin_37947156/article/details/93035607

https://www.cnblogs.com/nsw0419/p/12892241.html

https://www.cnblogs.com/mantch/archive/2019/09/30/11611554.html

https://cloud.tencent.com/developer/article/1492776

https://zhuanlan.zhihu.com/p/96023284

https://arxiv.org/pdf/1906.08237.pdf